TL;DR

As the AI arms race escalates across the geopolitical and technology spheres, headlines gush over OpenAI, Microsoft, Google, Meta, Anthropic, Perplexity, xAI, and Chinese disruptors, and they are right to do so. But the existential threat to American AI dominance is not the latest AI model weights; it is access to electrons. In an environment where new infrastructure takes decades of planning, the power needs of AI compute are immediate and growing.

To unpack this challenge, Congruent recently hosted a panel as part of San Francisco Climate Week featuring Karan Mistry from Boston Consulting Group, Bobby Hollis from Microsoft, and David Ulrey from Fervo Energy. There was consensus that AI compute is driving unprecedented demand for electricity and that macroeconomic risks may affect the pace of the data center buildout. We believe that AI compute and the data center infrastructure it requires represent the single largest energy investment opportunity in recent years. If approached correctly, the AI data center buildout has the potential to enhance the overall power grid and accelerate our transition to a cleaner energy future while serving the needs of the tech community.

As climate investors, we are seeing the impact of AI firsthand across our portfolio, with multiple companies capitalizing on AI to make the world more efficient and sustainable. These companies include First Street, which uses AI to assess physical climate risk; Fervo, which leverages AI compute to unlock vast new resources of clean geothermal power (including for use in Google’s data centers); Pano.AI, which provides AI-enabled early detection of wildfires; Camus Energy, whose AI-based software accelerates interconnection for data centers and other large loads; and Halcyon AI, which uses large language models to improve energy-related decision-making from vast amounts of published regulatory data.

As we scope the urgency of powering data centers, we have distilled our questions to the following:

1) What is the expected trajectory and timeline of AI data center power demand growth?

2) How is this growth creating new opportunities in energy and climate?

3) Can we scale technologies that deliver strong financial returns and climate benefits – while keeping pace with AI compute demand?

Innovation is in full force with novel business models, software optimizers, and new hardware solutions. If you’re innovating in these areas with a venture-appropriate model, we would love to hear from you.

Framing the AI Data Center Opportunity – and Challenges

According to JPMorgan, the major AI hyperscalers (Alphabet, Amazon, Meta, and Microsoft) spent $199B in capex in 2024, more than a 4x increase over the past 5 years, and their capex is estimated to grow a further 34% to $268B this year.1 Remarkably, these four tech companies, historically lauded by investors for their high margins and free cash flow, will spend more on capex than the four largest global mining companies and the four largest global oil and gas companies combined. Market intelligence firm International Data Corporation (IDC) projects that AI-enabled solutions will yield a global cumulative economic impact of $22.3 trillion by 2030, representing approximately 3.7% of global GDP.2 Given the traditionally fierce competition between the hyperscalers and an increasingly multipolar world in which nations seek self-sufficiency and resilience, a compute-driven arms race, with each party vying to match the others’ capabilities, is well underway.

Source: McKinsey and Company

Mobilizing such massive spending on technology infrastructure has precedent. The private sector has invested approximately $2.2T in US broadband infrastructure since 1996.3 Despite initial overbuilding between 1999 and 2001, investment in additional capacity has been fairly consistent at about $70B/yr over the past 20 years, with growth accelerating recently to $100B/yr. Remarkably, steady technology advancements have increased the throughput of a given fiber bundle by more than 645x since the early 2000s!4 Investment has kept pace because applications driven by data and compute have followed three powerful and mutually reinforcing trends:

· Moore’s Law: The number of transistors that can be packed into an integrated circuit doubles approximately every two years (from Gordon Moore, co-founder of Fairchild and Intel).

· Jevons Paradox: Using a resource more efficiently can increase, rather than decrease, its total consumption as the resource becomes more affordable and widespread (from the 1865 book The Coal Question, in which William Stanley Jevons observed that the introduction of more efficient steam engines led to more coal demand, not less).

· Metcalfe’s Law: The value of a communications network increases with the square of the number of connected users (from Bob Metcalfe, founder of 3Com and co-inventor of Ethernet).
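Two of these trends reduce to compact formulas. A minimal statement, where N_0 is the transistor count at some reference year, t is elapsed time in years, and n is the number of connected users (these symbols are ours, not taken from the original sources):

```latex
N(t) = N_0 \cdot 2^{t/2} \qquad \text{(Moore's Law)}

V(n) \propto n^{2} \qquad \text{(Metcalfe's Law)}
```

Over 20 years, Moore’s Law implies 2^10 = 1,024, roughly a thousandfold increase; under Metcalfe’s Law, doubling the number of users roughly quadruples a network’s value.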

Is AI compute the modern equivalent of the steam engine? As compute power augments and substitutes for cognitive labor, the demand for energy may be virtually limitless. As climate-focused investors, we see this as the opportunity to explore.

Implications for Energy Demand and Supply

Training and running AI models requires specialized graphics processing units (GPUs) crunching billions to trillions of parameters in ways that are far more energy-intensive than the traditional data retrieval, streaming, and communications applications that drove data center growth over the past two decades. At 2.9 watt-hours per ChatGPT request, AI queries are estimated to require 10x the electricity of traditional Google queries.5
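To see why per-query energy matters at scale, the sketch below converts a daily query volume into average continuous power. The volume of one billion queries per day is a hypothetical round number chosen for illustration, not a reported figure, and the per-search value is simply 2.9 Wh divided by the 10x ratio, not an independently sourced number.

```python
# 2.9 Wh per AI query is from the text; the search figure is only implied
# by the ~10x comparison.
WH_PER_AI_QUERY = 2.9
WH_PER_SEARCH = WH_PER_AI_QUERY / 10


def average_mw(queries_per_day: float, wh_per_query: float) -> float:
    """Average continuous power (MW) needed to serve a daily query volume."""
    wh_per_day = queries_per_day * wh_per_query
    watts = wh_per_day / 24  # spread the daily energy evenly over 24 hours
    return watts / 1e6       # W -> MW


QUERIES_PER_DAY = 1e9  # hypothetical volume, for illustration only

print(f"AI queries: {average_mw(QUERIES_PER_DAY, WH_PER_AI_QUERY):.0f} MW")
print(f"Searches:   {average_mw(QUERIES_PER_DAY, WH_PER_SEARCH):.0f} MW")
```

At that hypothetical volume, AI queries would need roughly 121MW of continuous supply versus about 12MW for the same number of conventional searches, before counting cooling and other facility overhead.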

AI models run faster and more efficiently in large data centers, and these advantages grow with scale. This has led to dramatic growth in the size of hyperscale data centers, which are 300-1,000MW in capacity, compared with traditional cloud and edge data centers, which were typically below 100MW. A modern hyperscale data center consumes as much power as 240,000-810,000 average US homes.6 Based on publicly available information and expert forecasts, the Electric Power Research Institute (EPRI) recently projected US data center load requirements and growth through 2030:

Source: EPRI
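The homes-equivalent figures for hyperscale data centers can be reproduced from the footnoted average of 10,791 kWh per US home per year. This sketch assumes the data center draws its full rated capacity around the clock, which somewhat overstates real consumption but matches the spirit of the comparison.

```python
HOURS_PER_YEAR = 8760
AVG_HOME_KWH_PER_YEAR = 10_791  # 2022 US EIA average, per the footnote


def equivalent_homes(facility_mw: float) -> int:
    """Homes whose average draw matches a facility running at full load 24/7."""
    avg_home_kw = AVG_HOME_KWH_PER_YEAR / HOURS_PER_YEAR  # about 1.23 kW
    return round(facility_mw * 1_000 / avg_home_kw)


for mw in (300, 1_000):
    print(f"{mw:>5} MW ~ {equivalent_homes(mw):,} homes")
```

The results, roughly 243,500 and 811,800 homes, are consistent with the rounded 240,000-810,000 range.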

In power terms, EPRI expects data center load to grow from approximately 17GW in 2023 to 57GW in 2030 in its high case. For context, the US consumed about 4,000 TWh in 2023 (an average demand of approximately 460GW) and has seen little to no aggregate load growth over the past two decades. Wood Mackenzie analyzed the combined effects of data centers, domestic manufacturing, and the electrification of transport and heating, and projects US electricity demand to increase between 4% and 15% through 2029, with likely capacity additions able to accommodate growth of just 2% per year.7
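Two conversions help read these figures. Annual energy (TWh) becomes average power (GW) by dividing by the 8,760 hours in a year, and EPRI’s 2023 and 2030 endpoints imply a compound annual growth rate. A minimal sketch; the 4,000 TWh input is the approximate 2023 US total, consistent with the ~460GW average demand.

```python
HOURS_PER_YEAR = 8760


def average_gw(annual_twh: float) -> float:
    """Average power (GW) implied by annual consumption (TWh)."""
    return annual_twh * 1_000 / HOURS_PER_YEAR  # TWh -> GWh, then per hour


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


print(f"US average demand: {average_gw(4_000):.0f} GW")
print(f"EPRI high case, 17 GW -> 57 GW: {cagr(17, 57, 2030 - 2023):.1%} per year")
```

Data center load growing at roughly 19% a year stands in sharp contrast to the near-flat aggregate load of the past two decades.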

Why the 2-13% gap between expected demand and supply? Although projected growth may seem modest in percentage terms, it presents enormous challenges for the power sector. First, 80% of today’s data center demand is concentrated in just 15 states, with particularly high concentrations in Texas and the Mid-Atlantic. Data centers are sited based on a multitude of factors such as existing data connectivity, land and power costs, availability of skilled labor, and the reliability and availability of primary and backup power. Future data center growth will likely remain concentrated in favorable geographies. Second, AI data center loads are individually massive, requiring costly and time-consuming high-voltage interconnection if supplied from the grid, or the buildout of substantial co-located generation. Third, data centers are critical engines of our modern economy and must run without interruption, 24/7, every day of the year, particularly if they are customer facing. In our discussions with hyperscalers and data center developers, four needs overlap and compete, as shown in the chart below, with “X” marking the ideal power source.

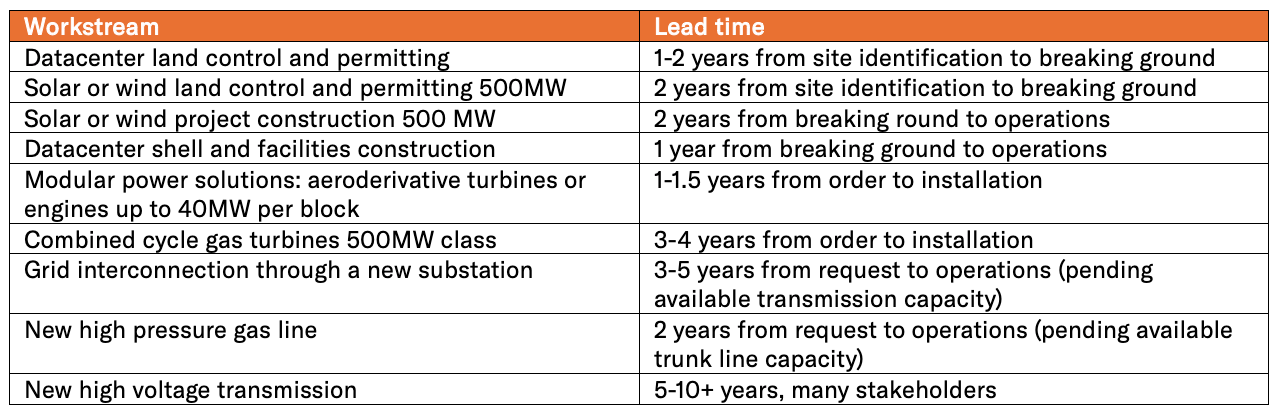

Speed: In the arms race to keep up with the competition in AI computing, speed ranks alongside reliability as a power sourcing criterion. Given the scale, cost, and complexity of developing and constructing AI data centers, our understanding from speaking with industry stakeholders is that hyperscalers are already talking with project developers about projects that will come online in the 2029 timeframe. This schedule is constrained by the development timeline for a 500MW AI compute facility, an example of which is shown below.

As outlined in Congruent’s recent thought piece Uncorking the Interconnection Bottleneck, the median time from requesting grid interconnection for new generation to commercial operation was 5 years for projects built in 2023, and this continues to lengthen. In 2022, eastern grid operator PJM froze all new requests until at least 2026. Integrated resource and capacity planning is a slow, back-and-forth exercise between utilities and regulatory commissions that seeks to ensure all utility customers (“ratepayers”) are treated fairly and that system costs are both manageable and spread equitably across the rate base. The job of utilities and their regulators is further complicated by uncertainty about AI market growth and the speed at which customers are requesting interconnection, making interconnection the key bottleneck for grid-connected data centers.

Reliability: Data centers are the foundation of the modern economy, from retail commerce to financial transactions to critical communications. The need for high reliability does not appear to be changing for AI compute,8 and hyperscalers are seeking 99.99-99.999% uptime from their power source; “five nines” equates to just 5.26 minutes of unplanned downtime per year. The reputational and consequential damages of downtime are significant. Outages at Amazon and Meta in 2021 resulted in revenue losses of over $500K and $200K per minute, respectively.9,10 EPRI reports that 83% of responding utilities had received requests from data center customers for higher reliability than the utility’s typical large industrial customers receive.11
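The “nines” arithmetic is simple: permitted downtime is the unavailable fraction times the minutes in a year. This sketch assumes an average year of 365.25 days; a 365-day year gives nearly the same answer.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # 525,960 minutes, averaging in leap years


def annual_downtime_minutes(availability: float) -> float:
    """Unplanned downtime per year permitted by an availability target."""
    return (1 - availability) * MINUTES_PER_YEAR


for target in (0.9999, 0.99999):
    print(f"{target:.3%} uptime -> {annual_downtime_minutes(target):.2f} min/yr")
```

Four nines allows about 52.6 minutes a year and five nines about 5.26 minutes: each additional nine cuts permitted downtime tenfold.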

Cost: While speed to power and reliability are paramount, cost still matters. Stakeholders report willingness to pay up to $125/MWh for firm, clean power that can be brought online in the next several years in desirable geographies. This is almost double the cost of wholesale gas-generated power and several times the cost of the intermittent wind and solar power that would be available to these customers in the same geographies and timeframe. JPMorgan estimates that AI data center provider CoreWeave generates $809M of revenue annually per 100MW of compute power at ~70% EBITDA margins. This equates to EBITDA of ~$650 per MWh of energy consumed. With industrial electricity costing $80/MWh on average across the US,12 energy is a meaningful operating cost, but not a primary driver of CoreWeave’s overall profitability.
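The ~$650/MWh figure follows from JPMorgan’s revenue and margin estimates if a 100MW facility is assumed to run at full load for all 8,760 hours of the year (about 876,000 MWh). The sketch below reproduces that step and, under the same full-load assumption, compares the annual electricity bill at $80/MWh and at the $125/MWh willingness-to-pay ceiling against revenue.

```python
HOURS_PER_YEAR = 8760


def annual_mwh(facility_mw: float) -> float:
    """Energy consumed in a year, assuming full load around the clock."""
    return facility_mw * HOURS_PER_YEAR


def ebitda_per_mwh(revenue: float, margin: float, facility_mw: float) -> float:
    """EBITDA earned per MWh of electricity consumed."""
    return revenue * margin / annual_mwh(facility_mw)


def energy_cost_share(price_per_mwh: float, revenue: float, facility_mw: float) -> float:
    """Annual electricity bill as a fraction of annual revenue."""
    return price_per_mwh * annual_mwh(facility_mw) / revenue


REVENUE = 809e6  # JPMorgan estimate, $ per year per 100 MW
MARGIN = 0.70    # approximate EBITDA margin

print(f"EBITDA: ${ebitda_per_mwh(REVENUE, MARGIN, 100):,.0f}/MWh")
for price in (80, 125):
    print(f"Power at ${price}/MWh: {energy_cost_share(price, REVENUE, 100):.1%} of revenue")
```

Under these assumptions, electricity runs about 8.7% of revenue at $80/MWh and under 14% even at $125/MWh, consistent with energy being a meaningful but secondary driver of profitability.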

Carbon Intensity: While hyperscalers continue to voice their commitments to reducing and eventually eliminating their carbon emissions, industry stakeholders sheepishly report that carbon intensity is taking a back seat to the three other criteria identified above. Hyperscalers recognize that data centers are long-lived assets and that unmitigated carbon emissions introduce financial and customer risks to the massive investments they are making in data centers. Companies are pursuing a variety of strategies, such as Microsoft’s plan to work with Constellation Energy to restart the nuclear facility formerly known as Three Mile Island in Pennsylvania, or Meta’s plan to site a data center with Entergy Louisiana using new gas generation paired with renewable power. EPRI’s utility survey reported that 57% of utility respondents had received requests for 24/7 carbon-free energy from data center customers.13

Solutions

Hyperscalers are among the largest and best capitalized companies in the world, able to invest heavily and attract large financing partners to their projects. With concentrated loads of 300-1,000MW, requirements for high reliability, and a desire to minimize carbon emissions, the opportunity for solution providers is compelling on many fronts. Although large-scale transmission could theoretically enable data center load growth by better leveraging existing generation capacity, only 55 miles of high-capacity transmission were built in 2023.14 The speed of anticipated AI data center deployment calls for new solutions and different ways of thinking. As we consider solutions, we focus on the timeline to technology maturity and on the design and build lifecycle of a data center. At roughly $10/W to build, hyperscale data centers are massive projects, $5B-10B in scale, so the cycle of integrating new solutions must start years before construction. We see solutions for powering data centers quickly and effectively across a broad spectrum, from initial site selection to operations, and a diverse opportunity set, from innovative business models that align project stakeholders to new power generation technologies.
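Project scale is simply capacity times build cost per watt. This minimal sketch applies the stated ~$10/W across the hyperscale size range; under that assumption the $5B-10B range corresponds to 500-1,000MW facilities, while a 300MW site would land nearer $3B.

```python
def capex_billion_usd(facility_mw: float, usd_per_watt: float = 10.0) -> float:
    """Rough build cost in $B: capacity in watts times cost per watt."""
    watts = facility_mw * 1e6
    return watts * usd_per_watt / 1e9


for mw in (300, 500, 1_000):
    print(f"{mw:>5} MW -> ${capex_billion_usd(mw):.1f}B")
```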

Exciting solutions such as small modular nuclear reactors could be game-changers but are likely 5+ years from first commercial units, even for the most advanced startups. These will compete with scaled carbon capture solutions and conventional nuclear generation, which may be lower cost. Local power generation with modular gas turbines and engines is much less efficient than conventional generation but is filling the gap because it can be deployed quickly. These deployments have attracted significant local and environmental opposition for allegedly violating environmental and permitting regulations.15 Retrofitting such generation with carbon capture could be a future opportunity across many sites.

xAI’s data center in South Memphis, Tennessee, with 35 engines generating 422MW:

Photograph: Steve Jones/Flight by Southwings for Southern Environmental Law Center

Increasing the efficiency of the data center itself could mitigate the need for lengthy and costly power grid enhancements. While compute is the single largest load in the data center, cooling is responsible for 30-40% of total data center load. Power usage effectiveness (PUE) is an industry-wide metric that divides the total energy used by a data center by the energy consumed by the IT equipment alone, so a PUE of 1.0 would mean no overhead at all. While the industry average PUE has plateaued at approximately 1.6 over the past decade, leading hyperscalers report a PUE of 1.1,16 indicating potential for industry-wide efficiency gains.

Source: Uptime Institute Global Data Center Survey 2024

Source: EPRI
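PUE translates directly into capacity: for a fixed grid connection, the power left for IT equipment is the connection size divided by PUE. The 100MW connection below is a hypothetical round number; the PUE values of 1.6 and 1.1 come from the text.

```python
def pue(total_facility_power: float, it_power: float) -> float:
    """Power usage effectiveness: total facility power over IT power."""
    return total_facility_power / it_power


def it_capacity_mw(grid_connection_mw: float, pue_value: float) -> float:
    """IT load a fixed grid connection can support at a given PUE."""
    return grid_connection_mw / pue_value


GRID_MW = 100  # hypothetical interconnection size
industry = it_capacity_mw(GRID_MW, 1.6)
leading = it_capacity_mw(GRID_MW, 1.1)
print(f"PUE 1.6: {industry:.1f} MW of IT load")
print(f"PUE 1.1: {leading:.1f} MW of IT load ({leading / industry - 1:.0%} more compute)")
```

Under these assumptions, moving from industry-average to leading-edge efficiency supports about 45% more compute on the same grid connection, which is why efficiency can substitute for some grid upgrades.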

Flexible interconnection, combined with software approaches (like Camus Energy) that can identify and better leverage existing and underutilized infrastructure such as substations, transmission capacity, and gas and renewable power assets, is appealing because it can compress time and cost. Duke University recently published a study showing that 76 GW of new load, equivalent to 10% of the nation’s current aggregate peak demand, could be integrated with an average annual load curtailment rate of 0.25%.17 We are intrigued by business models that bring together hyperscalers, utilities, and traditional power developers to create win-win partnerships that integrate existing infrastructure.
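A curtailment rate is easier to picture in hours. Assuming, for illustration, that the 0.25% rate is measured against a full year of full-load operation (the study’s precise definition may differ), it corresponds to roughly 22 full-load-equivalent hours per year:

```python
HOURS_PER_YEAR = 8760


def curtailed_hours(annual_rate: float) -> float:
    """Full-load-equivalent hours per year implied by a curtailment rate."""
    return annual_rate * HOURS_PER_YEAR


print(f"{curtailed_hours(0.0025):.1f} hours/yr")
```

That is less than a day’s worth of interruption spread across a year, which helps explain the appeal of flexible interconnection.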

Congruent’s Bull and Bear Perspectives

There are many reasons to believe that AI compute is a generational opportunity and that approaches to powering AI data centers could make for compelling investments. But there are also uncertainties, complex regulatory landscapes, and diverse stakeholder needs to balance. We summarize our Bull and Bear views on this market:

Enablers (Bull Case)

1. Size of opportunity. It’s rare to see so much capital mobilized so quickly on what is fundamentally energy infrastructure, and the hyperscalers are among the largest and best capitalized customers in the world. If approached correctly, the data center buildout has the potential to enhance the power grid and accelerate the deployment of cleaner, advanced energy technologies.

2. Breadth of the opportunity. Related to size is the breadth of approaches and solutions that can be brought to bear on the problem. Innovative business models, software optimizations, and hardware can attack different areas of the problem individually and in concert.

3. Opportunities for partnership. AI compute has attracted the best and brightest from across the globe. Entrepreneurs, large companies, utilities, government and regulators are keen to catalyze solutions and create opportunities. No previous sector has valued speed to power this highly.

Obstacles (Bear Case)

1. Unclear sector growth. Some argue that AI infrastructure is being overbuilt relative to AI’s near-term commercial applications. If the hyperscalers are unable to monetize their compute investments, growth will pause.

2. Compute efficiency. In January 2025, a Chinese company, DeepSeek, gained attention for releasing a dramatically more compute-efficient large language model (LLM). While the reported results were not as remarkable as financial markets initially perceived, improved algorithms and compute efficiency may curb AI’s seemingly insatiable demand for power.

3. Macro Uncertainty and Tariffs. AI data centers are very costly, with inputs ranging from steel to semiconductors and from concrete to electrical connectors. Construction relies on broad global supply chains, and tariffs, inflation, or fluctuating trade policies create uncertainty that will hinder capital investment in large-scale data center infrastructure.

Final Thoughts: What We’re Looking For

We love bold ideas attacking billion-dollar problems and this area seems ripe with opportunity. We have been energized by the conversations we’ve had with entrepreneurs, startups and other stakeholders seeking innovative solutions to quickly and cleanly power AI data centers. Here are a few ideas to get the conversation started:

· Flexible compute and rapid grid-response solutions for power management

· Advanced microgrid solutions coordinating diverse behind-the-meter resources

· More efficient power conversion from high-voltage AC to low-voltage DC

· Cost-effective, modular point-source carbon capture

If you are a tech innovator, power developer, entrepreneur, customer or potential corporate partner we would love to collaborate!

Based on an average US home consuming 10,791 kWh/yr in 2022 per the US EIA

Note: AI computing consists of two distinct phases, model training and inference, but the bulk of the energy consumed over a model’s lifetime is expected to go to running inference applications. Hyperscalers seem focused on building data centers that can accommodate both training and inference rather than data centers specialized to the unique needs of each phase.